First of all, what is a load balancer?

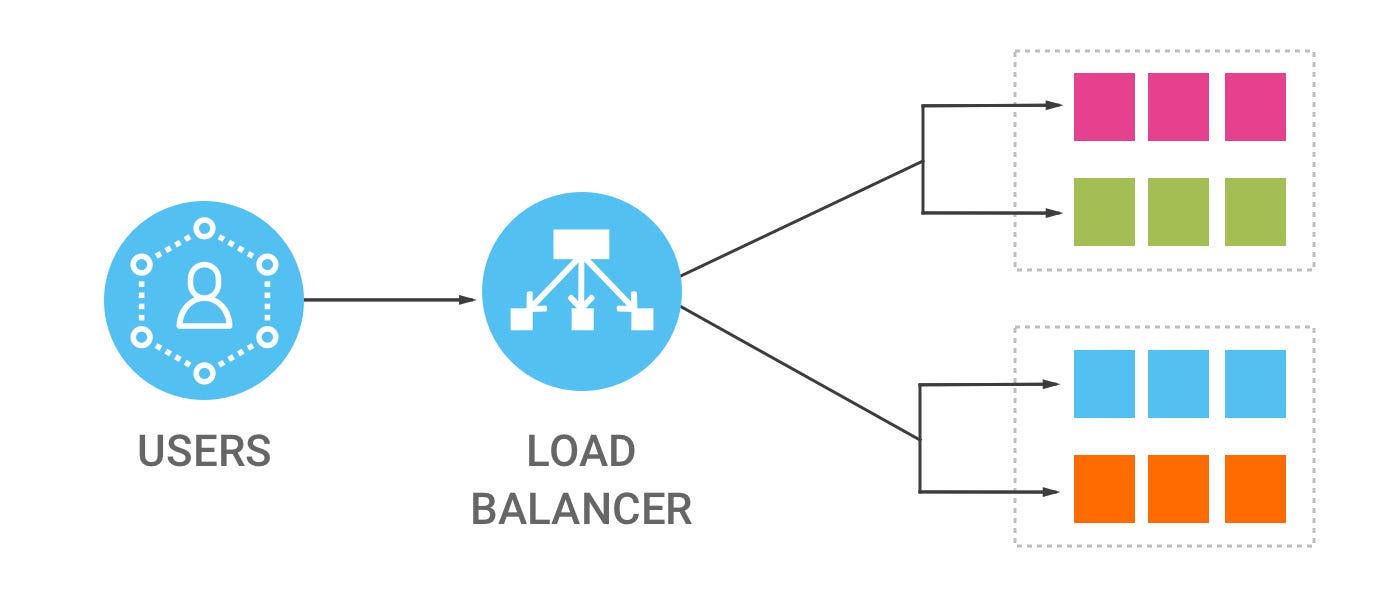

A load balancer is a device, deployed as either hardware or software, that distributes connections from clients across a set of servers. It acts as a ‘reverse proxy’, representing the application servers to the client through a virtual IP address (VIP). This technology is known as server load balancing (SLB). SLB is designed for pools of application servers within a single site or local area network (LAN).

Load balancers are used to provide availability and scalability to the application. The application can scale beyond the capacity of a single server. The load balancer works to steer the traffic to a pool of available servers through various load balancing algorithms. If more resources are needed, additional servers can be added.

Load balancers run health checks against the application on each server to determine its availability. If the health check fails, the load balancer takes that instance of the application out of its pool of available servers. When the application comes back online, the health check confirms its availability and the server is put back into the pool.
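The health check cycle can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the `ServerPool` class, the `probe` callback, and the server names are all hypothetical, and the probe is simulated with a dictionary rather than a real HTTP or TCP check.

```python
from typing import Callable, Dict, List


class ServerPool:
    """Tracks which servers are currently eligible to receive traffic."""

    def __init__(self, servers: List[str], probe: Callable[[str], bool]) -> None:
        self.servers = list(servers)
        self.probe = probe  # hypothetical probe: returns True if the app is healthy
        self.available: Dict[str, bool] = {s: True for s in self.servers}

    def run_health_checks(self) -> None:
        # Re-evaluate every server: failures leave the pool, recoveries rejoin it.
        for server in self.servers:
            self.available[server] = self.probe(server)

    def available_servers(self) -> List[str]:
        return [s for s in self.servers if self.available[s]]


# Simulated application state standing in for a real network probe.
state = {"app1": True, "app2": False, "app3": True}
pool = ServerPool(["app1", "app2", "app3"], probe=lambda s: state[s])

pool.run_health_checks()
print(pool.available_servers())  # ['app1', 'app3']

state["app2"] = True  # the application comes back online
pool.run_health_checks()
print(pool.available_servers())  # ['app1', 'app2', 'app3']
```

A real load balancer would run these checks on a timer and usually require several consecutive failures or successes before changing a server's status.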

Because the load balancer sits between the client and the application server and manages the connection, it can perform other functions as well. The load balancer can perform content switching, provide content-based security such as a web application firewall (WAF), and add authentication enhancements such as two-factor authentication (2FA).

How does a load balancer work?

A load balancer is a reverse proxy. It presents a virtual IP address (VIP) representing the application to the client. The client connects to the VIP, and the load balancer uses its algorithms to decide which application instance on which server receives the connection. The load balancer continues to manage and monitor the connection for its entire duration.

Imagine a sports agent negotiating a new contract for a star athlete. The agent takes the request from the athlete and sends it to a specific interested team. The team responds with information (an offer) which the agent then passes back to the client. This goes on for a while until a resolution is reached.

This is the primary function of the load balancer: server load balancing (SLB). The agent can provide additional functionality based on their role in the conversation. They can decide to allow or deny certain details (security). They may want to validate that the person they are talking to is actually the athlete in question (authentication). If the current sports league is not working out, the agent can send the discussions to a different league based on availability or location (global server load balancing, or GSLB).

What types of load balancers are out there?

To understand the types of load balancers, one needs to understand the history.

Network Server Load Balancers

Load balancers entered the market in the mid-1990s to support the surge of traffic on the internet. They had basic functionality designed to pool server resources to meet this demand. These load balancers managed connections based on the packet header. Specifically, they looked at the 5-tuple: source IP, destination IP, source port, destination port, and IP protocol. This was the origin of the network server load balancer, or Layer 4 load balancer.
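To make the 5-tuple concrete, here is a rough sketch of a Layer 4 decision. It assumes a connection table keyed by the 5-tuple, with new connections assigned in simple rotation; the `FiveTuple` and `Layer4Balancer` names and the addresses are illustrative only.

```python
from itertools import cycle
from typing import Dict, List, NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


class Layer4Balancer:
    """Picks a server per connection using only packet-header fields."""

    def __init__(self, servers: List[str]) -> None:
        self._rotation = cycle(servers)
        self._connections: Dict[FiveTuple, str] = {}

    def forward(self, packet: FiveTuple) -> str:
        # Packets that belong to a known connection stay on the same server.
        if packet not in self._connections:
            self._connections[packet] = next(self._rotation)
        return self._connections[packet]


lb = Layer4Balancer(["server1", "server2"])
conn_a = FiveTuple("198.51.100.7", "203.0.113.10", 40000, 443, "tcp")
conn_b = FiveTuple("198.51.100.8", "203.0.113.10", 40001, 443, "tcp")

print(lb.forward(conn_a))  # server1
print(lb.forward(conn_b))  # server2
print(lb.forward(conn_a))  # server1 (same connection, same server)
```

Nothing in this decision looks at the payload, which is what separates it from the application load balancers described next.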

Application Load Balancers

As technology evolved, so did the load balancers. They became more advanced and started providing content awareness and content switching. These load balancers look beyond the packet header and into the content payload, using content such as the URL and HTTP headers to make load balancing decisions. These are the application load balancers, or Layer 7 load balancers.
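Content switching can be pictured as a set of rules evaluated against the request. The sketch below is only an illustration: the pool names, the path prefixes, and the `User-Agent` rule are invented for the example, and real products express such rules in their own configuration syntax.

```python
from typing import Dict, List, Tuple

# Hypothetical routing rules: (URL path prefix, pool name), checked in order.
RULES: List[Tuple[str, str]] = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(path: str, headers: Dict[str, str]) -> str:
    """Pick a server pool from request content rather than packet headers."""
    # Header-based rule: send mobile clients to a dedicated pool.
    if "Mobile" in headers.get("User-Agent", ""):
        return "mobile-pool"
    # URL-based rules: the first matching prefix wins.
    for prefix, pool in RULES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


print(choose_pool("/api/orders", {}))       # api-pool
print(choose_pool("/static/logo.png", {}))  # static-pool
print(choose_pool("/index.html", {}))       # web-pool
```

Once a pool is chosen, any of the distribution algorithms described later can pick the individual server within it.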

Global Server Load Balancing

Global server load balancing (GSLB) is a different technology from the traditional Layer 4-7 load balancer. GSLB is based on DNS and acts as a DNS proxy, providing responses in real time based on GSLB load balancing algorithms. It is easiest to think of GSLB as a dynamic DNS technology that manages and monitors multiple sites through configurations and health checks. Most load balancing solutions today offer GSLB as a component of their functionality.

Hardware vs Software vs Virtual Load Balancing

Load balancers originated as hardware solutions. Hardware provides a simple appliance that delivers the functionality with a focus on performance. Hardware-based load balancers are designed for installation within datacenters. They are turn-key solutions that do not have the dependencies of software-based solutions, such as hypervisors and commercial off-the-shelf (COTS) hardware.

As network technologies evolved, software-defined, virtualized, and cloud-based technologies have become important. Software-based load balancing solutions offer flexibility and the ability to integrate with virtualization orchestration solutions. Some environments, such as cloud, require software solutions. Software-based environments often use DevOps and/or CI/CD processes. The software load balancer is better suited to these environments because of its flexibility and integration.

Elastic Load Balancers

Elastic Load Balancer (ELB) solutions go a step further and offer cloud-computing operators capacity that scales with traffic requirements at any given time. Elastic load balancing adjusts as traffic to an application changes over time. It also scales load balancing instances automatically and on demand. Because elastic load balancing uses request routing algorithms to distribute incoming application traffic across multiple instances, and scales those instances as necessary, it increases the fault tolerance of your applications.

How does a load balancer distribute client traffic across servers?

There are numerous techniques and algorithms that can be used to intelligently load balance client access requests across server pools. The technique chosen will depend on the type of service or application being served and the status of the network and servers at the time of the request. The methods outlined below can be used in combination to determine the best server to service new requests. The current level of requests to the load balancer often determines which method is used. When the load is low, one of the simple load balancing methods will suffice. In times of high load, the more complex methods are used to ensure an even distribution of requests.

Load Balancing Techniques:

Round Robin

Round-robin load balancing is one of the simplest and most used load balancing algorithms. Client requests are distributed to application servers in rotation. For example, if you have three application servers, the first client request goes to the first application server in the list, the second client request to the second application server, the third client request to the third application server, the fourth to the first application server, and so on.

This load balancing algorithm does not take the characteristics of the application servers into consideration; that is, it assumes that all application servers are the same, with the same availability, computing, and load handling characteristics.
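The three-server rotation described above can be sketched with the standard library. The server names are placeholders, and a production load balancer would also skip servers that are failing health checks.

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in a fixed rotation, forever."""
    return cycle(servers)


rotation = round_robin(["server1", "server2", "server3"])
first_four = [next(rotation) for _ in range(4)]
print(first_four)  # ['server1', 'server2', 'server3', 'server1']
```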

Weighted Round Robin

Weighted Round Robin builds on the simple Round-robin load balancing algorithm to account for differing application server characteristics. The administrator assigns a weight to each application server, based on criteria of their choosing, to reflect the application server's traffic-handling capability. If application server #1 is twice as powerful as application server #2 (and application server #3), application server #1 is provisioned with a higher weight, and application servers #2 and #3 get the same, lower weight. If there are five sequential client requests, the first two go to application server #1, the third goes to application server #2, the fourth to application server #3, and the fifth to application server #1.
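The five-request example can be reproduced with a small sketch. It assumes weights of 2, 1, and 1 and uses the simplest possible scheme, repeating each server in the rotation as many times as its weight; real implementations often interleave servers more smoothly.

```python
from itertools import cycle, islice
from typing import Dict, Iterator


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Rotate through servers, repeating each one `weight` times per cycle."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


# Assumed weights: server1 is twice as powerful as server2 and server3.
weights = {"server1": 2, "server2": 1, "server3": 1}
first_five = list(islice(weighted_round_robin(weights), 5))
print(first_five)  # ['server1', 'server1', 'server2', 'server3', 'server1']
```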

Least Connection

Least Connection load balancing is a dynamic load balancing algorithm where client requests are distributed to the application server with the fewest active connections at the time the client request is received. Even when application servers have similar specifications, one server may become overloaded because of longer-lived connections; this algorithm takes the active connection load into consideration.
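A minimal sketch of the idea, assuming the load balancer keeps a counter of active connections per server. The class and method names are illustrative, and ties are broken by list order here, which is an arbitrary choice.

```python
from typing import Dict, List


class LeastConnectionBalancer:
    """Sends each new connection to the server with the fewest active ones."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        # min() returns the first server among those tied for the lowest count.
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when a connection closes.
        self.active[server] -= 1


lb = LeastConnectionBalancer(["server1", "server2"])
print(lb.acquire())  # server1
print(lb.acquire())  # server2
print(lb.acquire())  # server1
lb.release("server2")  # server2's only connection closes
print(lb.acquire())  # server2 (0 active connections versus 2)
```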

Weighted Least Connection

Weighted Least Connection builds on the Least Connection load balancing algorithm to account for differing application server characteristics. The administrator assigns a weight to each application server, based on criteria of their choosing, to reflect the application server's traffic-handling capability. The LoadMaster makes its load balancing decision based on both active connections and application server weighting.
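One common way to combine the two inputs is to compare active connections divided by weight. The sketch below assumes that ratio; the text does not specify the exact formula a given product uses, so treat this as one plausible interpretation.

```python
from typing import Dict


def pick_weighted_least_connection(active: Dict[str, int], weights: Dict[str, int]) -> str:
    """Choose the server with the lowest connections-to-weight ratio (assumed formula)."""
    return min(active, key=lambda s: active[s] / weights[s])


# server1 has more connections, but its weight says it can carry twice as many.
active = {"server1": 4, "server2": 3}
weights = {"server1": 2, "server2": 1}
print(pick_weighted_least_connection(active, weights))  # server1 (4/2 < 3/1)
```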

Resource Based (Adaptive)

Resource Based (Adaptive) is a load balancing algorithm that requires an agent to be installed on the application server; the agent reports the server's current load to the load balancer. The installed agent monitors the application server's availability status and resources. The load balancer queries the output from the agent to aid in load balancing decisions.
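As a rough illustration, suppose each agent reports a single load score and the load balancer prefers the lowest one. The scoring rule below (the busier of CPU and memory) and all of the numbers are assumptions made for the example, not a description of any real agent's output.

```python
from typing import Dict


def load_score(cpu_percent: float, memory_percent: float) -> float:
    """Hypothetical agent metric: the busier of CPU and memory, from 0 to 100."""
    return max(cpu_percent, memory_percent)


def pick_least_loaded(reports: Dict[str, float]) -> str:
    """Load balancer side: choose the server whose agent reports the lowest load."""
    return min(reports, key=lambda s: reports[s])


# Simulated agent reports gathered by the load balancer.
reports = {
    "server1": load_score(cpu_percent=80.0, memory_percent=40.0),
    "server2": load_score(cpu_percent=35.0, memory_percent=60.0),
    "server3": load_score(cpu_percent=20.0, memory_percent=90.0),
}
print(pick_least_loaded(reports))  # server2
```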

Resource Based (SDN Adaptive)

SDN Adaptive is a load balancing algorithm that combines knowledge from Layers 2, 3, 4 and 7 and input from an SDN Controller to make more optimized traffic distribution decisions. This allows information about the status of the servers, the status of the applications running on them, the health of the network infrastructure, and the level of congestion on the network to all play a part in the load balancing decision making.

Fixed Weighting

Fixed Weighting is a load balancing algorithm where the administrator assigns a weight to each application server, based on criteria of their choosing, to reflect the application server's traffic-handling capability. The application server with the highest weight receives all of the traffic. If the application server with the highest weight fails, all traffic is directed to the application server with the next highest weight.
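In effect this is a priority list with failover, which a short sketch makes clear. The server names, weights, and the `healthy` set are hypothetical; in practice the healthy set would come from health checks.

```python
from typing import Dict, Optional, Set


def pick_fixed_weight(weights: Dict[str, int], healthy: Set[str]) -> Optional[str]:
    """Return the healthy server with the highest weight, or None if none is healthy."""
    candidates = [s for s in weights if s in healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda s: weights[s])


weights = {"primary": 100, "standby": 50, "last-resort": 10}

print(pick_fixed_weight(weights, {"primary", "standby", "last-resort"}))  # primary
print(pick_fixed_weight(weights, {"standby", "last-resort"}))             # standby
```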

Weighted Response Time

Weighted Response Time is a load balancing algorithm where the response times of the application servers determine which application server receives the next request. The application server's response time to a health check is used to calculate the application server weights. The application server that is responding the fastest receives the next request.
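The sketch below assumes weights that are inversely proportional to the measured health check response time and normalized to sum to 1. That weighting formula is an assumption for illustration; the text only says that response times are used to calculate the weights.

```python
from typing import Dict


def response_time_weights(response_ms: Dict[str, float]) -> Dict[str, float]:
    """Assumed formula: weight is proportional to 1 / response time."""
    inverse = {server: 1.0 / ms for server, ms in response_ms.items()}
    total = sum(inverse.values())
    return {server: value / total for server, value in inverse.items()}


def pick_fastest(response_ms: Dict[str, float]) -> str:
    """The server with the highest weight (fastest response) gets the next request."""
    weights = response_time_weights(response_ms)
    return max(weights, key=lambda s: weights[s])


# Simulated health check response times in milliseconds.
measured = {"server1": 120.0, "server2": 45.0, "server3": 80.0}
print(pick_fastest(measured))  # server2
```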

Source IP Hash

Source IP Hash is a load balancing algorithm that combines the source and destination IP addresses of the client and server to generate a unique hash key. The key is used to allocate the client to a particular server. Because the key can be regenerated if the session is broken, the client request is directed to the same server it was using previously. This is useful if it’s important that a client reconnects to a session that is still active after a disconnection.
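A minimal sketch of the hashing step, assuming SHA-256 over the two addresses and a modulo over the server list. The hash function and the addresses are illustrative choices; what matters is that the same pair of addresses always yields the same key.

```python
import hashlib
from typing import List


def pick_by_ip_hash(src_ip: str, dst_ip: str, servers: List[str]) -> str:
    """Map a (source IP, destination IP) pair to a server deterministically."""
    key = f"{src_ip}|{dst_ip}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer index into the pool.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["server1", "server2", "server3"]
first = pick_by_ip_hash("198.51.100.7", "203.0.113.10", servers)
again = pick_by_ip_hash("198.51.100.7", "203.0.113.10", servers)
print(first == again)  # True: a reconnecting client lands on the same server
```

Note that with a plain modulo, adding or removing a server changes the mapping for many clients; consistent hashing is a common way to limit that effect.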

URL Hash

URL Hash is a load balancing algorithm that distributes writes evenly across multiple sites and sends all reads to the site that owns the object.