Posted on July 29, 2019

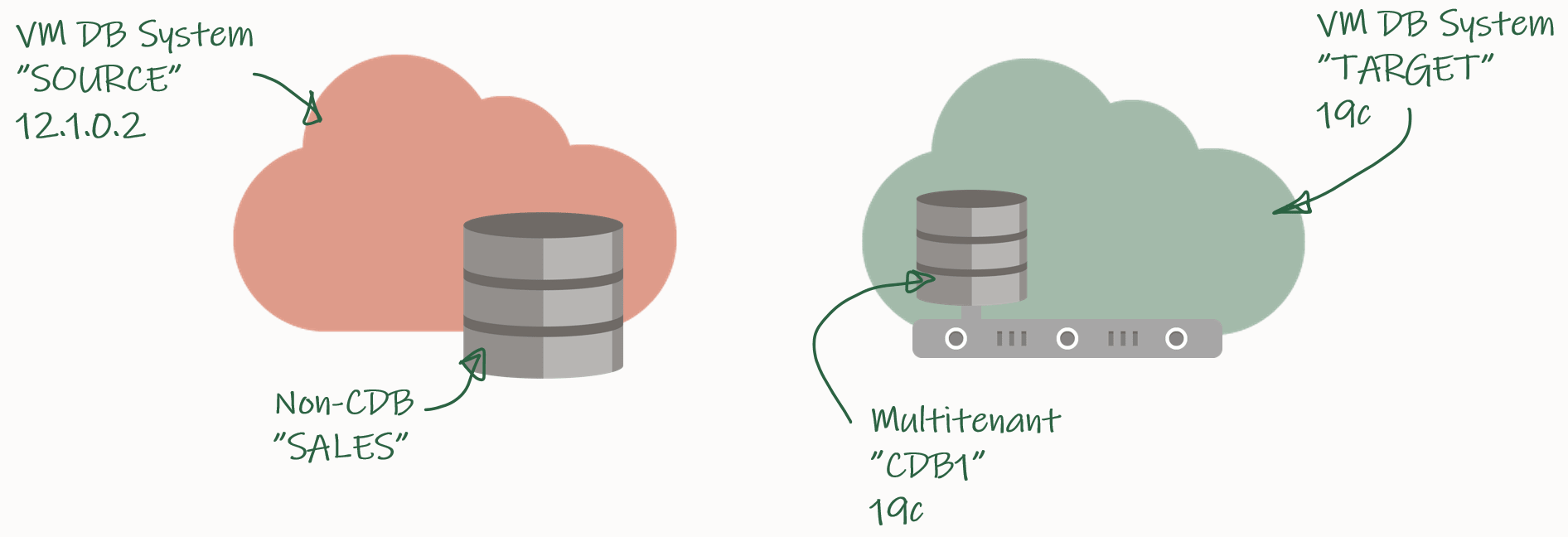

You may have realized that there are a few techniques missing describing how to do a Database Migration from non-CDB to PDB – Migration with Data Pump is one of them. I will explain the most simple approach of going to Single- or Multitenant. It isn’t the coolest – and it isn’t very fast as soon as your database has a significant size. But it is not complex. And it allows you to move even from very old versions directly into an Oracle 19c PDB – regardless of patch levels or source and destination platform.

High Level Overview

| Endianness change possible: | Yes |

| Source database versions: | Oracle 10.1.0.2 or newer |

| Characteristic: | Direct migration into PDB |

| Upgrade necessary: | No, happens implicitly |

| Downtime: |

Migration – mainly depending on size and complexity |

| Minimal downtime option(s): | Oracle GoldenGate |

| Process overview: | Export from source, import into destination – either via dump file or via Database Link |

| Fallback after plugin: |

Data Pump – optional: Oracle GoldenGate |

Database Migration from non-CDB to PDB – Migration with Data Pump

Well, I think I don’t need to explain Oracle Data Pump to anybody. At the end of this blog post you will find a long list of links pointing to the documentation and various workarounds. The big advantages of using Data Pump to migrate from a non-CDB into a PDB are:

- Works with every version since Oracle 10.1.0.2

- Works regardless of patch level

- Does not require any upgrade

- Works across all platforms

- Works regardless of encryption

- Allows multiple transformations

But the disadvantages of Data Pump are obvious as well as the duration depends mostly on:

- Amount of data

- Complexity of meta information

- Special data types such as LONG and LOB

I’d call Data Pump the most flexible approach but of course potentially also the slowest of all options.

Process Overview

Using Data Pump either allows you to export into a dump file, and import from this dump file afterwards.

Or you setup a database link from destination to source, and run the import from the destination of the database link using the NETWORK_LINK parameter.

The advantage of using a database link is not-writing a dump file which does not need to be copied over. But not all actions can run in parallel. Plus, not every data type is supported (LONG for instance until 12.2). And your limiting factor is always the source side as Data Pump implicitly calls expdp on the source side. To my experience this can be faster but especially when you work on the same storage or SAN, and you don’t have to move the dump file around, the first approach often works better.

Some Best Practices

There are some recommendations for both approaches:

- Always use a par file

- For a consistent export, use either

FLASHBACK_TIME=SYSTIMESTAMPorCONSISTENT=Y(since 11.2) - Always

EXCLUDE=STATISTICS– regather stats in destination is faster, or transport with aSTATStable fromDBMS_STATS - Set

METRICS=Yand since 12.1,LOGTIME=ALL - Use

PARALLEL=<2x number of cpu cores>- Since Oracle 12.2, meta data gets exported in parallel – but not with

NETWORK_LINK

- Since Oracle 12.2, meta data gets exported in parallel – but not with

- Preallocate

STREAMS_POOL_SIZE=128M(or in the range of 64M-256M) - BasicFile LOB (old 8i LOBs) are always slow

- Use

LOB_STORAGE=SECUREFILEto convert to SecureFile LOBs as part of the migration

- Use

Fallback

When you used Data Pump as a migration approach to move from non-CDB to PDB, then I don’t expect you to force a fast fallback scenario in case of failure. The important task is to use the VERSION parameter correctly when you export from the destination PDB. You need to set it to the source’s release in order to export in the format and with the contents, the (old) source will understand when you reimport. Make sure, there’s an empty database waiting in case fallback is important. And don’t cleanup your old source home too early.

Be aware of one major pitfall: The time zone version. As typically your source database has a lower time zone version than the destination, you can migrate “forward” (same or higher version) but not “backwards” (lower version). Hence, in case of fallback you most likely need to apply a DST Time Zone patch to the older home in order to allow Data Pump to import. And make sure you follow the supported configurations setup from MOS Note:553337.1 carefully.

Unfortunately, the fallback strategy over a NETWORK_LINK does not work. Even though the below scenario looks promising, you’ll receive an error when you call imdp from the lower version over the DB Link. I’d assume that the VERSION parameter does not get propagated in a way to convince the expdp side to export in 11.2.0.4 format.

This will be the error you’ll receive:

$ impdp system/oracle@ftex network_link=sourcedb version=11.2.0.4 tables=tab1 metrics=y exclude=statistics directory=mydir logfile=pdb2.log Import: Release 11.2.0.4.0 - Production on Wed Aug 14 20:27:52 2019 Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production With the Partitioning, OLAP, Data Mining and Real Application Testing options ORA-39001: invalid argument value ORA-39169: Local version of 11.2.0.4.0 cannot work with remote version of 19.0.0.0.0.